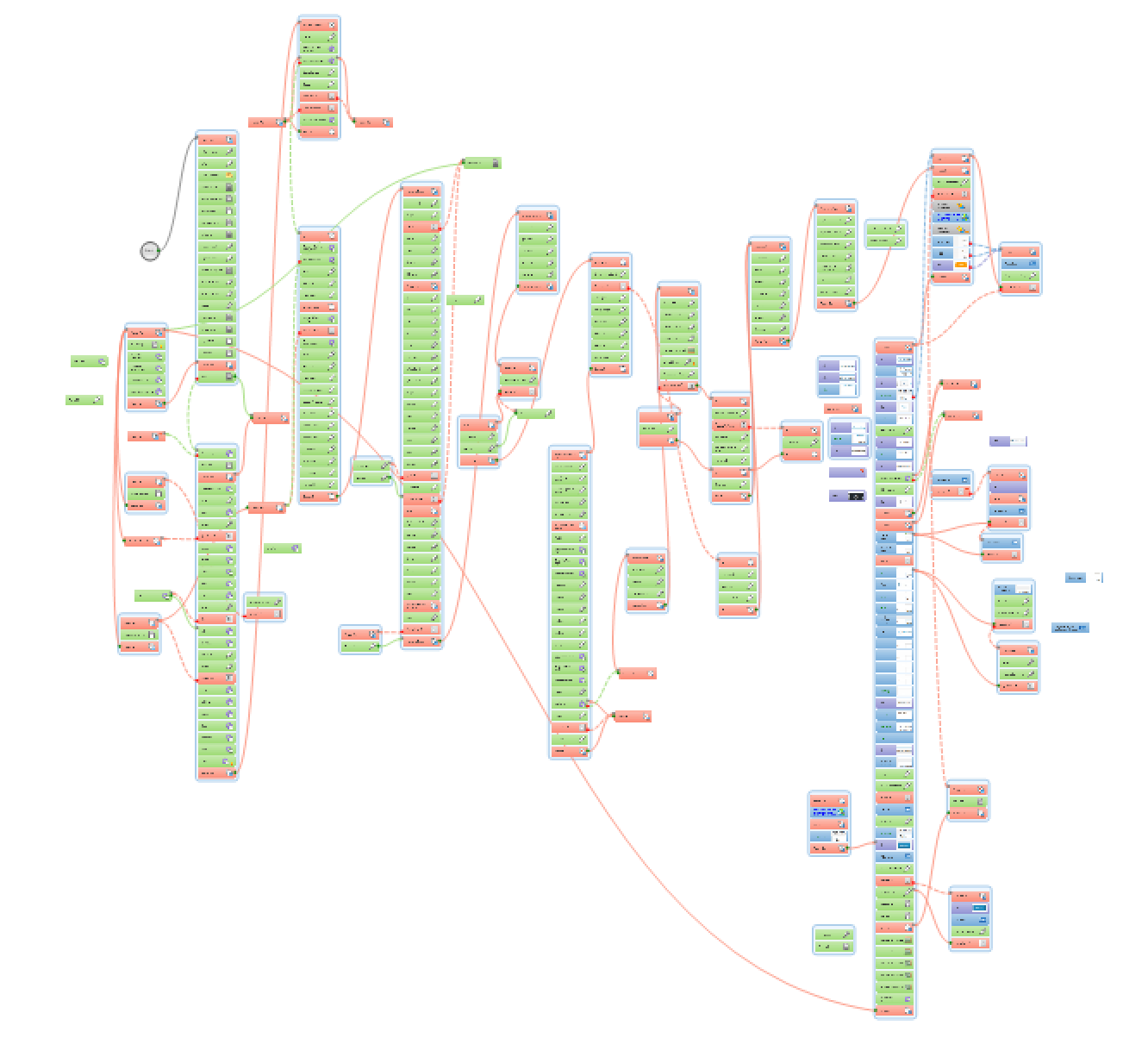

If you have read my previous post about how I, an online marketer, found myself messing around with visual programming, you might have wondered: what are the everyday uses of those scripts when dealing with ad campaigns and web sites? Well, let me share some examples from the last few years of working with these web automation tools:

Overcoming limitations of AdWords: finding more manual display network placements

Have you ever wondered whether AdWords suggests all the relevant display network placements, YouTube videos or YouTube playlists when you try to add them by entering relevant keywords? Well, the answer is that if you rely only on the ad management interface of AdWords, you will miss a lot of relevant placements. Fortunately, with some web automation skills, you can quickly build a script which finds even more relevant placements, for instance by executing site searches on YouTube for a certain list of keywords, automatically clicking through to the next result pages and generating a simple list of URLs based on what has been displayed on the search result pages.

Analyzing your data the way you want: exporting external link data in a meaningful format

Although Google Webmaster Tools (a.k.a. Google Search Console) lets you browse through a huge list of web pages that link to a certain site of yours, you cannot really export that data in a usable format, with the linking domain, the linking web page and the linked page in the same row. You could click through the list of linking domains, then the list of linking pages, and export a bunch of tables based on this hierarchy, but this is exactly the kind of repetitive task which can be fairly easily automated. By adding a few more steps, like scraping the title of the linking page plus the anchor text of the link, you can end up with a really informative list of your external links – at least of those which are displayed by Google.
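To make the "same row" format concrete, here is a minimal Python sketch of the flattening step. It assumes the scraping has already produced a nested structure (linking domain, then linking page, then linked pages); the function names, the sample URLs and the column headers are all made up for illustration and are not anything the tool itself exports.

```python
import csv
import io


def flatten_links(links_by_domain):
    """Turn {domain: {linking_page: [linked_page, ...]}} into flat rows."""
    rows = []
    for domain, pages in sorted(links_by_domain.items()):
        for linking_page, targets in sorted(pages.items()):
            for linked_page in targets:
                rows.append((domain, linking_page, linked_page))
    return rows


def rows_to_csv(rows):
    """Serialize the rows as CSV text with one link per line."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    # Hypothetical column names, chosen for this example only.
    writer.writerow(["linking_domain", "linking_page", "linked_page"])
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical sample data standing in for what a scraper collected.
sample = {
    "blog.example": {
        "http://blog.example/review": [
            "http://mysite.example/",
            "http://mysite.example/pricing",
        ],
    },
    "forum.example": {
        "http://forum.example/t/42": ["http://mysite.example/"],
    },
}

rows = flatten_links(sample)
print(rows_to_csv(rows))
```

Once every link is a single row, sorting, filtering and pivoting in a spreadsheet becomes trivial, which is the whole point of the exercise.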

Analyzing your data the way you want: obtaining raw engagement data

While Facebook shows you some insights about how your pages or posts are performing, you cannot simply grab the raw data behind these statistics, such as the number of visitors who liked or shared certain posts in a given timeframe. But you can build a script which automatically scrolls and scrolls and scrolls – and extracts any data about the posts displayed. Having all the data in a spreadsheet format, you can visualize it the way you want. As a bonus: you can even do this with your competitors’ Facebook pages.

Automating repetitive tasks: checking link building results

Way back when we were building tens of thousands of links on web directory sites, no link submission software could provide us with detailed and reliable data about which directories had accepted and published our link submissions and which had not. Without knowing how many links were eventually generated and where those links were located, we could not create detailed reports for our clients. On top of that, the biggest problem of directory link building was that you never knew at the time of submission where the submitted link would be displayed in the directory. So the challenge was not only going through a list of URLs to see whether our link was found on those pages or not: we had to look through the entire directory to figure out where exactly that link was. All in all, this task was more complicated than running curl or wget on a list of URLs and grepping the results. Before I knew how to automate this process with visual scripting, we had to do this highly repetitive task by hand, so scripting saved us a lot of manual work.
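The "look through the entire directory" part boils down to a small crawl. The following Python sketch is not the visual script we used, just an illustration of the idea under some loud assumptions: the `fetch` function is injected (here it is a plain dictionary lookup over a fake directory, so the example runs offline), and all URLs and names are hypothetical.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def find_backlink(start_url, target_domain, fetch, max_pages=200):
    """Breadth-first walk of one directory site.

    Returns the directory pages that link to target_domain.
    `fetch(url)` must return the page HTML, or None if unavailable.
    """
    start_host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    found = []
    visited = 0
    while queue and visited < max_pages:
        url = queue.popleft()
        visited += 1
        html = fetch(url)
        if html is None:
            continue
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.hrefs:
            absolute = urljoin(url, href)
            host = urlparse(absolute).netloc
            if host == target_domain or host.endswith("." + target_domain):
                if url not in found:
                    found.append(url)
            elif host == start_host and absolute not in seen:
                # Internal link: keep crawling inside the directory only.
                seen.add(absolute)
                queue.append(absolute)
    return found


# Fake directory used instead of real HTTP requests (an assumption
# made so the sketch is runnable without network access).
FAKE_DIRECTORY = {
    "http://dir.example/": '<a href="/cat/travel">Travel</a>'
                           '<a href="/cat/shops">Shops</a>',
    "http://dir.example/cat/travel": '<a href="http://other.example/">Other</a>',
    "http://dir.example/cat/shops": '<a href="http://www.client.example/">Client</a>',
}

result = find_backlink("http://dir.example/", "client.example", FAKE_DIRECTORY.get)
print(result)
```

In a real run, `fetch` would wrap an HTTP client with timeouts and polite delays, and `max_pages` keeps a huge directory from being crawled forever.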

Processing spreadsheet data: checking and merging what’s common in two tables

When you have to work with email lists and related data coming from different sources, you could quickly diff or merge two .csv files with Unix command line tools. But not everyone in your organization possesses the “geeky” skills to fire up awk for that, and many times you are also too lazy to find the best solution on StackOverflow. In these cases, with automation software, you can even create an .exe file with an easy-to-use interface which asks for two files and a few more parameters, such as which column’s data should be matched against the other spreadsheet, and then merges the matching rows into a unified table. Or it can do whatever else you can achieve with regular expressions, if / then statements and loops.
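For comparison, the matching logic itself is short when written in Python. This is a minimal sketch, not what the generated .exe does internally: it assumes both files have a header row and share one key column, it compares keys case-insensitively, and the function name and sample data are invented for the example.

```python
import csv
import io


def merge_on_column(left_text, right_text, key):
    """Return the rows present in both CSV texts, joined on `key`.

    Keys are compared after trimming whitespace and lowercasing.
    When both tables have the same column, the left value wins.
    """
    left_rows = list(csv.DictReader(io.StringIO(left_text)))
    right_rows = list(csv.DictReader(io.StringIO(right_text)))
    right_index = {row[key].strip().lower(): row for row in right_rows}

    merged = []
    for row in left_rows:
        match = right_index.get(row[key].strip().lower())
        if match is not None:
            combined = dict(match)
            combined.update(row)
            merged.append(combined)
    return merged


# Hypothetical sample tables.
left = "email,name\nann@example.com,Ann\nbob@example.com,Bob\n"
right = "email,city\nBOB@example.com,Berlin\ncat@example.com,Cairo\n"

merged = merge_on_column(left, right, "email")
print(merged)
```

Only Bob appears in both tables here, so the result is a single unified row carrying his name and his city.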

Extracting structured data from an unstructured source: list of products on a website

Unfortunately, there are still many web shop owners who run their sites on proprietary web shop management systems which cannot simply export the list of products, or cannot export it in the appropriate format or with all the desired data. In these cases, it is very handy if you can quickly build a script which scrapes the entire web shop and outputs a spreadsheet of every product, containing all the important attributes and product data. Based on the result, you can start working on either the on-site SEO or importing those lists to Google AdWords or Facebook Ads.
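As a rough illustration of the extraction step, here is a small Python sketch using only the standard library. The markup is entirely hypothetical: it assumes each product sits in a `div` with class `product`, with the name and price in child elements carrying the classes `name` and `price`, and no nested tags or nested divs inside them. Every real web shop will need its own selectors.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects name/price pairs from the assumed product markup."""

    FIELDS = ("name", "price")

    def __init__(self):
        super().__init__()
        self.products = []
        self._current = None  # product being filled, if any
        self._field = None    # field whose text is being read

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self._current = {}
        elif self._current is not None:
            for name in self.FIELDS:
                if name in classes:
                    self._field = name

    def handle_data(self, data):
        if self._current is not None and self._field:
            previous = self._current.get(self._field, "")
            self._current[self._field] = previous + data.strip()

    def handle_endtag(self, tag):
        if self._field:
            # The field element just closed.
            self._field = None
        elif tag == "div" and self._current is not None:
            # The product container closed: store the finished row.
            self.products.append(self._current)
            self._current = None


def extract_products(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.products


# Hypothetical page fragment.
PAGE = (
    '<div class="product"><h2 class="name">Red mug</h2>'
    '<span class="price">4.90</span></div>'
    '<div class="product"><h2 class="name">Blue mug</h2>'
    '<span class="price">5.50</span></div>'
)

products = extract_products(PAGE)
print(products)
```

Each dictionary in the result maps directly to one spreadsheet row, so writing the list out with `csv.DictWriter` is the natural next step.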

Migrating web sites: exporting and importing from/to any CMS

There are quite a few ways of importing data into WordPress, but still, you might miss some features which can normally be accessed only if you upload the content manually, such as attaching images to a certain post or setting the featured images. Not to mention that before that, you have to get to the point of having the data extracted from the old web site into a structured format such as XML or CSV. As many older CMSes and proprietary content management systems do not have such data exporting features, this part of the job can also be quite complicated, if not impossible. On the other hand, with some web automation skills you can extract any data in any format from the original site, and then imitate a human being filling out the corresponding fields by simply automating the new site’s administration interface. You don’t have to rely on any export-import plugin’s features, or its shortcomings.

Web spamming: black hat SEO, fake Facebook accounts…

The tools I’m using for automating the above tasks were originally meant for creating accounts and posting content to a wide range of sites, in other words for spamming the entire web. But this is something I have never used these tools for, believe it or not 🙂

Coming next: